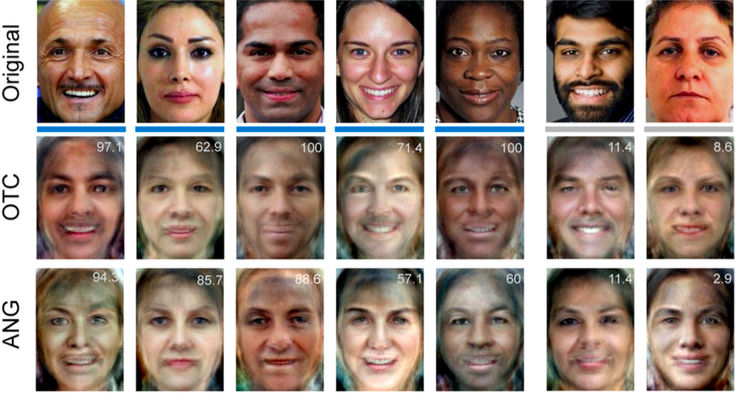

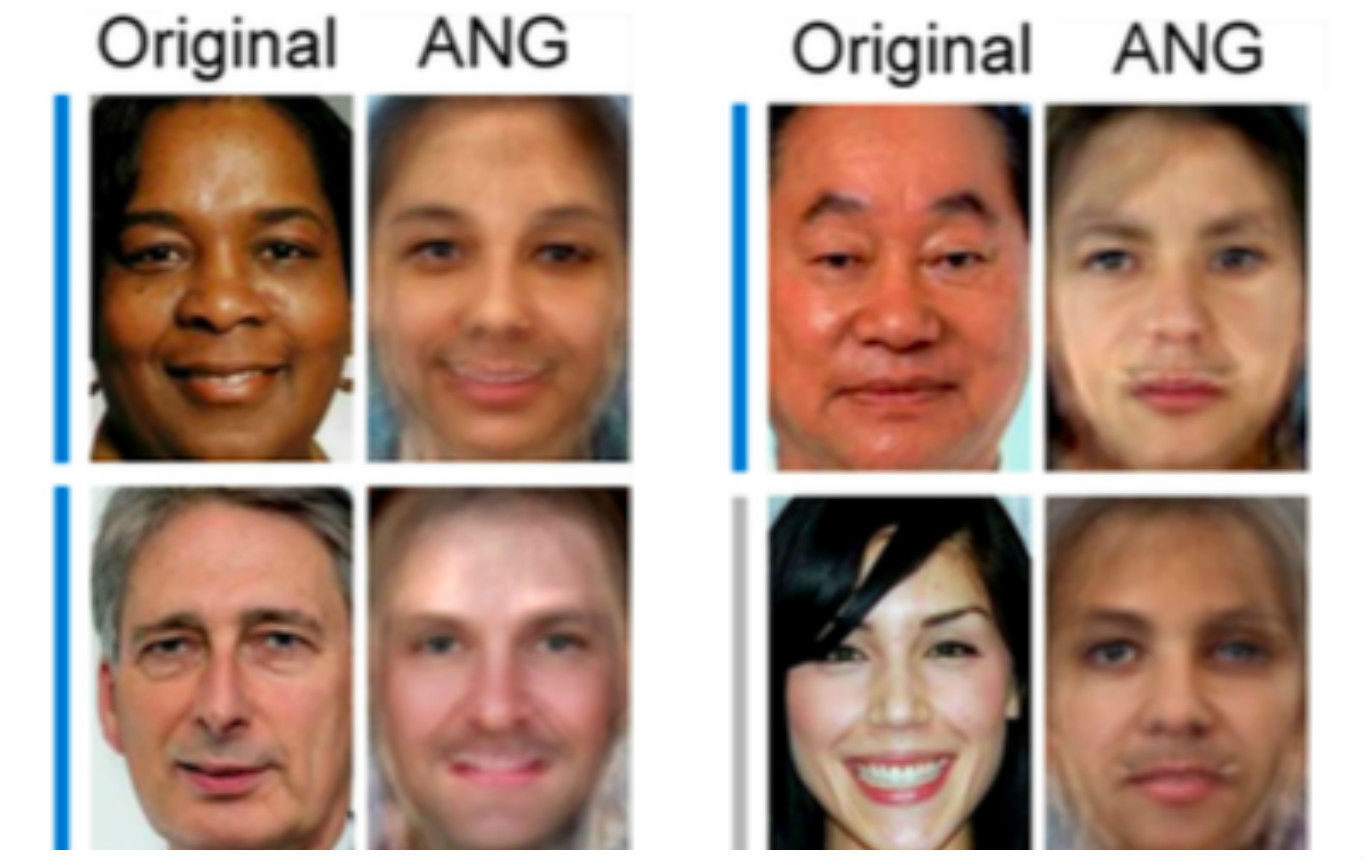

The study, led by Brice Kuhl and Hongmi Lee from the University of Oregon, used artificial intelligence (AI) that analysed brain activity in an attempt to reconstruct one of a series of faces that participants were seeing. It’s not an exact science, but the AI did get close. “We can take someone’s memory – which is typically something internal and private – and we can pull it out from their brains,” Kuhl told Vox. “Some people use different definitions of mind reading, but certainly, that’s getting close.”

Kuhl and Lee recently published a paper in The Journal of Neuroscience with a conclusion straight out of science fiction: they created images directly from memories using an MRI, some machine learning software, and a few hapless human guinea pigs.

Kuhl’s method involves putting test participants in an MRI scanner and showing them several hundred faces. The program had access to real-time MRI data from the machine, as well as a set of 300 numbers that described each face. The numbers covered everything from skin tone to eye position. Because an MRI detects the movement of blood around the brain, and blood flow was taken as a proxy for brain activity, the program analysed how blood moved in response to each face to learn how a particular brain reacts to known stimuli.

With a few hundred examples incorporated into its algorithm, the AI was then put to the test. The participants were again shown a face, but this time the program didn’t know anything about the numbers describing it. The only thing it had to go on was the MRI data that described brain activity as the person saw the face. Over time, the AI learns to match blood flow, the stand-in for brain activity, with the facial features seen by the subjects in the MRI machine, and it sputters out rough images of what the subjects are seeing.

The resulting images have a dream-like quality, with blurred edges and shifts in skin tone. 
The images look like the kind of vague renderings a person might see in their own mind. But the machine is able to get facial expressions right, and other features, such as an upturned nose or an arched eyebrow, seem to translate faithfully from mind to machine. To put some numbers on the program’s success rate, Kuhl and Lee showed the strange reconstructions to another set of participants and asked them questions relating to skin tone, gender and emotion. The group’s answers matched the original faces at a higher rate than random chance, which suggests the AI’s reconstructions carry relatively accurate information. “[The researchers] showed these reconstructed images to a separate group of online survey respondents and asked simple questions like, ‘Is this male or female?’ ‘Is this person happy or sad?’ and ‘Is their skin colour light or dark?’

To a degree greater than chance, the responses checked out. These basic details of the faces can be gleaned from mind reading.” Kuhl told Vox that the image fidelity can be improved with more sessions, as the AI collects more data and gains more experience in matching brain activity to the details of images seen by test subjects.
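
For readers who want a feel for the train-then-decode procedure described in this article, here is a minimal Python sketch on synthetic data. It is an illustration under stated assumptions, not the researchers’ code: the article does not say which learning method they used, so ridge regression stands in for it, the “voxel” signals are simulated rather than real MRI data, and the sizes (`N_COMPONENTS`, `N_VOXELS`, `N_TRAIN`, `N_TEST`) and helper names (`simulate`, `fit_ridge`, `rowwise_corr`) are made up for this example. The article mentions 300 numbers per face; the sketch uses 20 to stay small.

```python
import numpy as np

# Illustrative sizes only (assumptions, not values from the study).
N_COMPONENTS = 20   # numbers describing each face (the article mentions 300)
N_VOXELS = 50       # simulated brain-activity measurements per face
N_TRAIN, N_TEST = 300, 50


def simulate(n_faces, true_map, rng, noise=1.0):
    """Make synthetic face codes and the noisy 'voxel' responses they evoke."""
    codes = rng.standard_normal((n_faces, true_map.shape[0]))
    voxels = codes @ true_map
    voxels = voxels + noise * rng.standard_normal(voxels.shape)
    return voxels, codes


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def rowwise_corr(A, B):
    """Pearson correlation between matching rows of A and B."""
    A = A - A.mean(axis=1, keepdims=True)
    B = B - B.mean(axis=1, keepdims=True)
    norms = np.linalg.norm(A, axis=1) * np.linalg.norm(B, axis=1)
    return (A * B).sum(axis=1) / norms


rng = np.random.default_rng(0)

# Hypothetical ground truth: how each face number drives each voxel.
true_map = rng.standard_normal((N_COMPONENTS, N_VOXELS))

# Training phase: the program sees both the brain data and the face numbers.
train_voxels, train_codes = simulate(N_TRAIN, true_map, rng)
decoder = fit_ridge(train_voxels, train_codes, alpha=10.0)

# Test phase: only brain data is available; the face numbers are predicted.
test_voxels, test_codes = simulate(N_TEST, true_map, rng)
predicted_codes = test_voxels @ decoder

# Compare predictions with the true face and with a deliberately wrong face.
matched = rowwise_corr(predicted_codes, test_codes).mean()
mismatched = rowwise_corr(predicted_codes, np.roll(test_codes, 1, axis=0)).mean()
print(f"correlation with the face actually shown: {matched:.2f}")
print(f"correlation with a different face:        {mismatched:.2f}")
```

In a real pipeline the predicted numbers would then be turned back into an image. The mismatched comparison plays the role of the chance baseline: a decoder that has learned something should match the face actually shown far better than an unrelated one.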

The team is now working on an even tougher task: getting participants to see a face, hold it in memory, and then having the AI reconstruct it from the person’s memory of what the face looked like. “I don’t want to put a cap on it,” Kuhl said. “We can do better.” He also made it clear that his machines can’t read people’s minds without their cooperation. “You need someone to play ball,” Kuhl said. “You can’t extract someone’s memory if they are not remembering it, and people most of the time are in control of their memories.” The study has been published in The Journal of Neuroscience.